About me

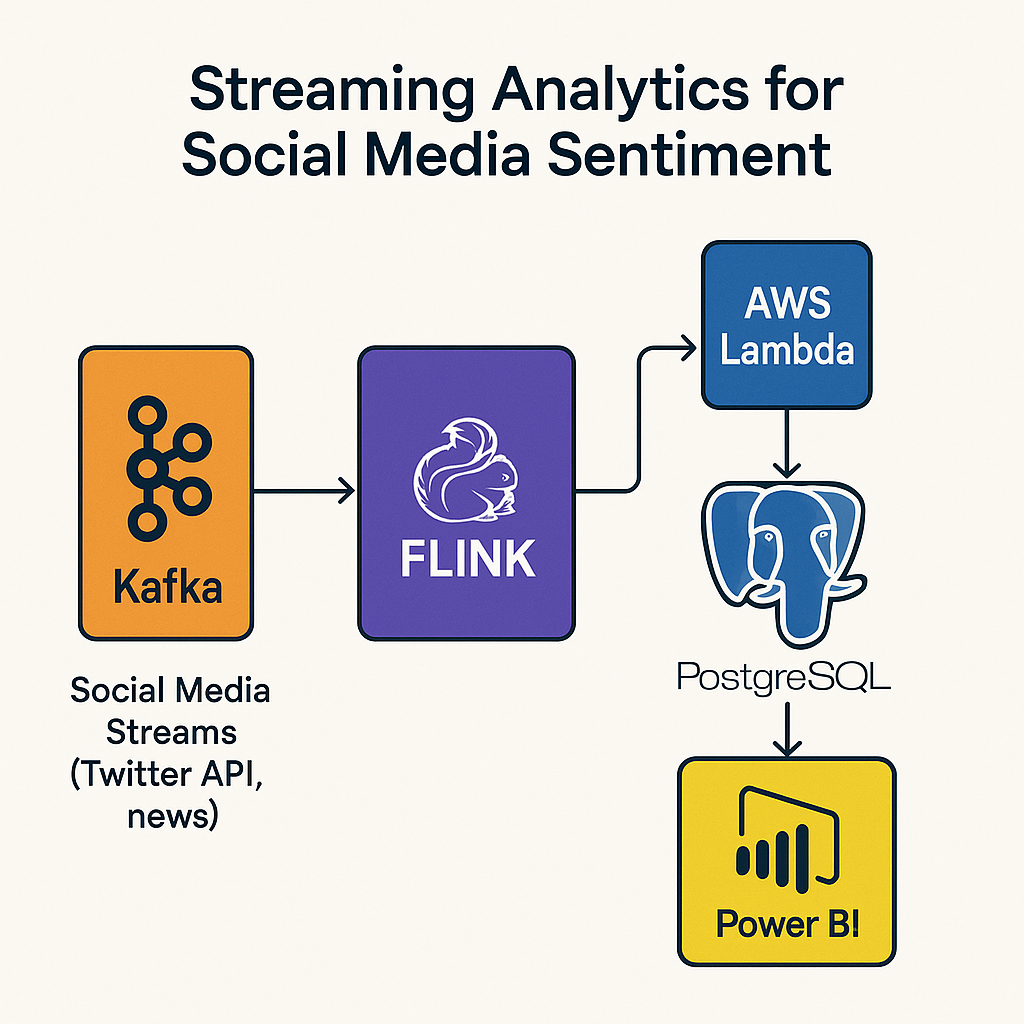

AWS Certified Data Engineer with around 4 years of experience designing scalable data pipelines and cloud-based solutions. Skilled in using Python, AWS, and big data technologies such as Apache Spark and Kafka to build efficient, high-performance data processing systems.

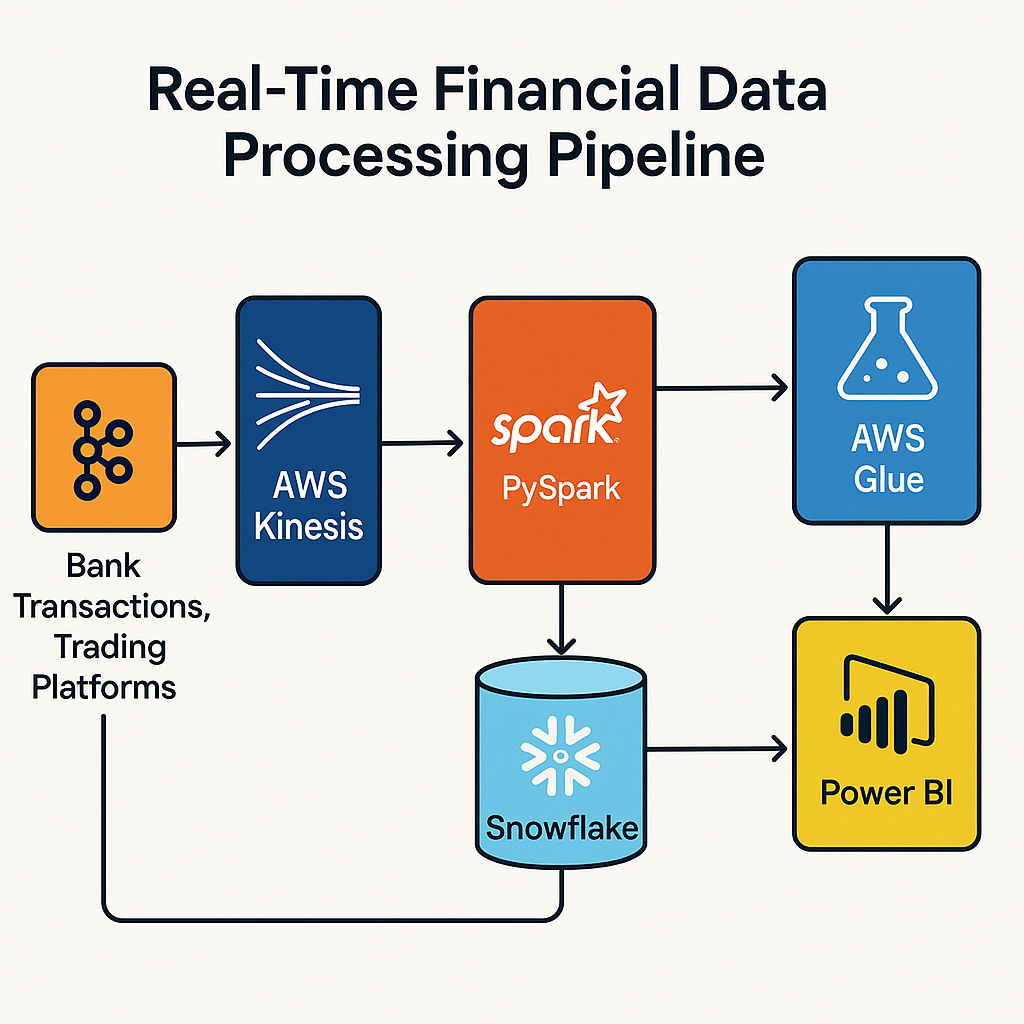

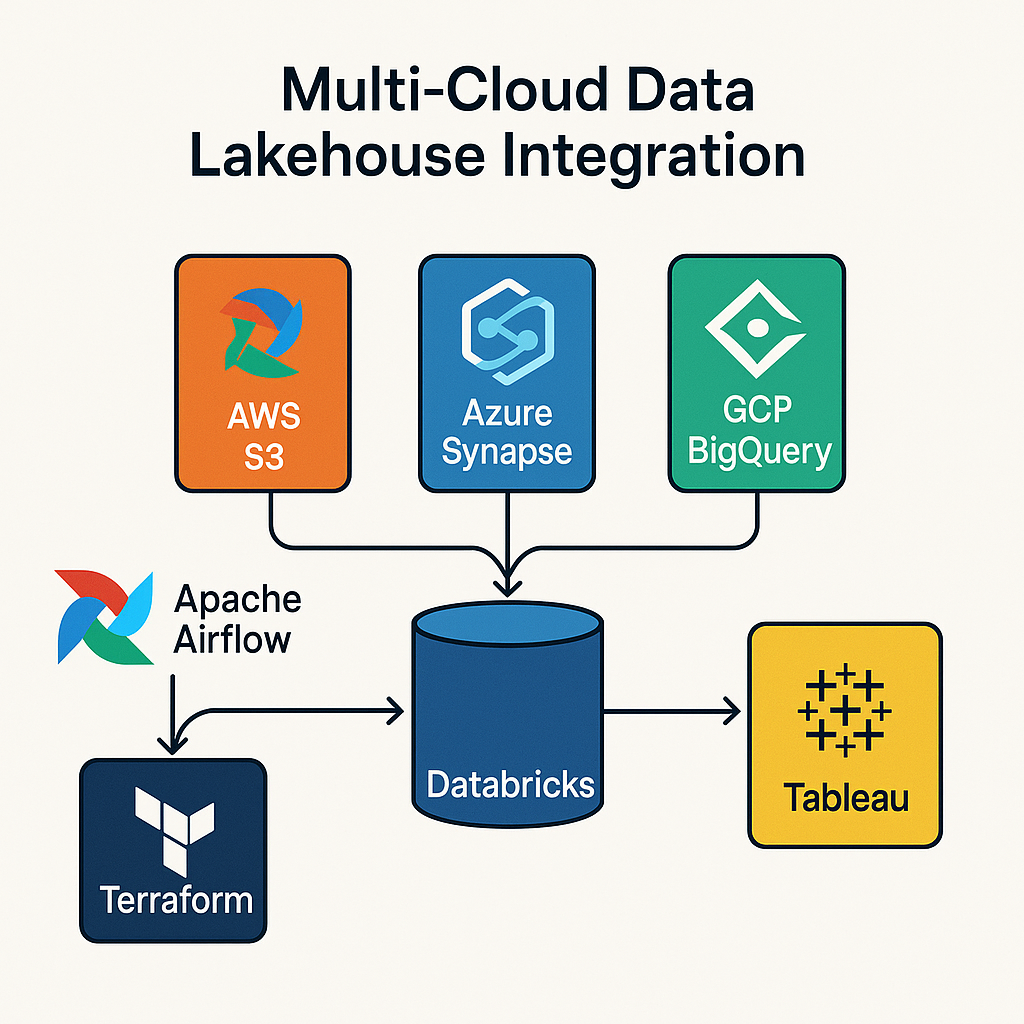

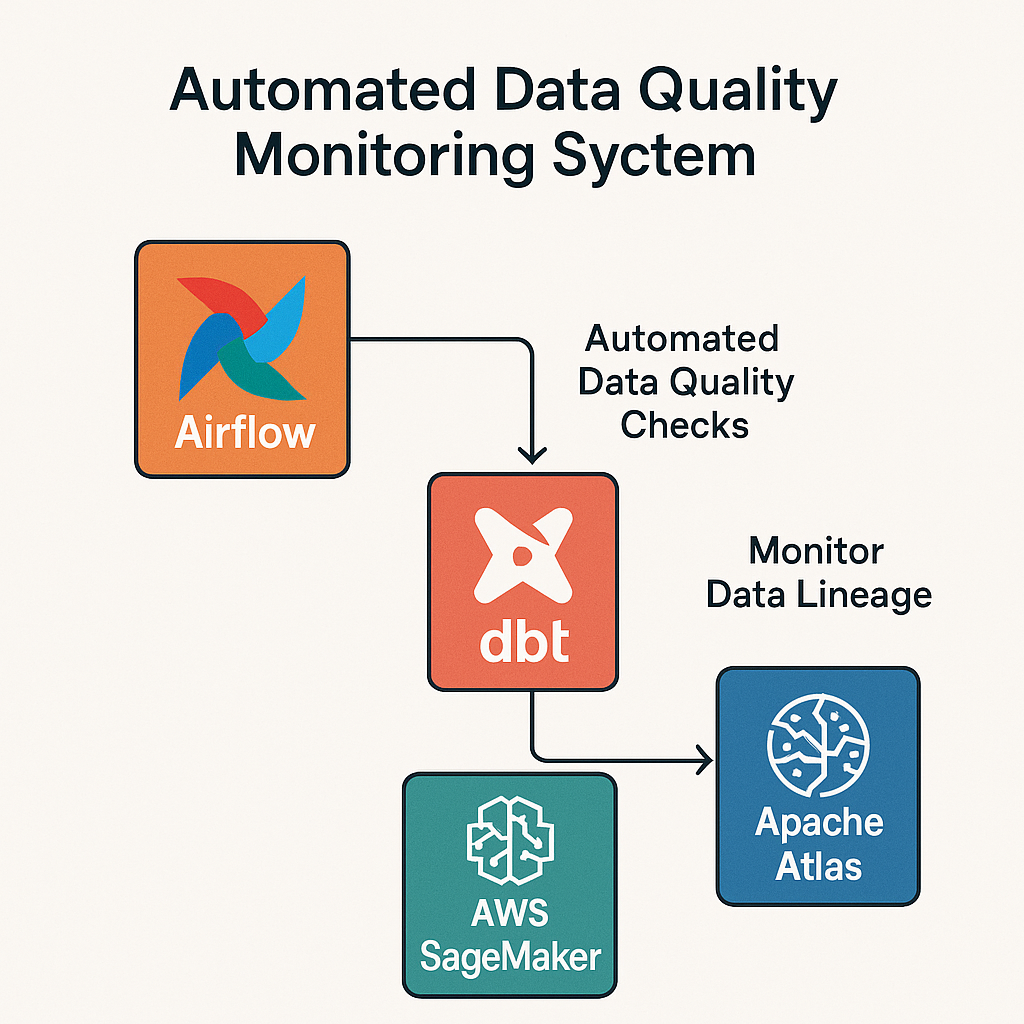

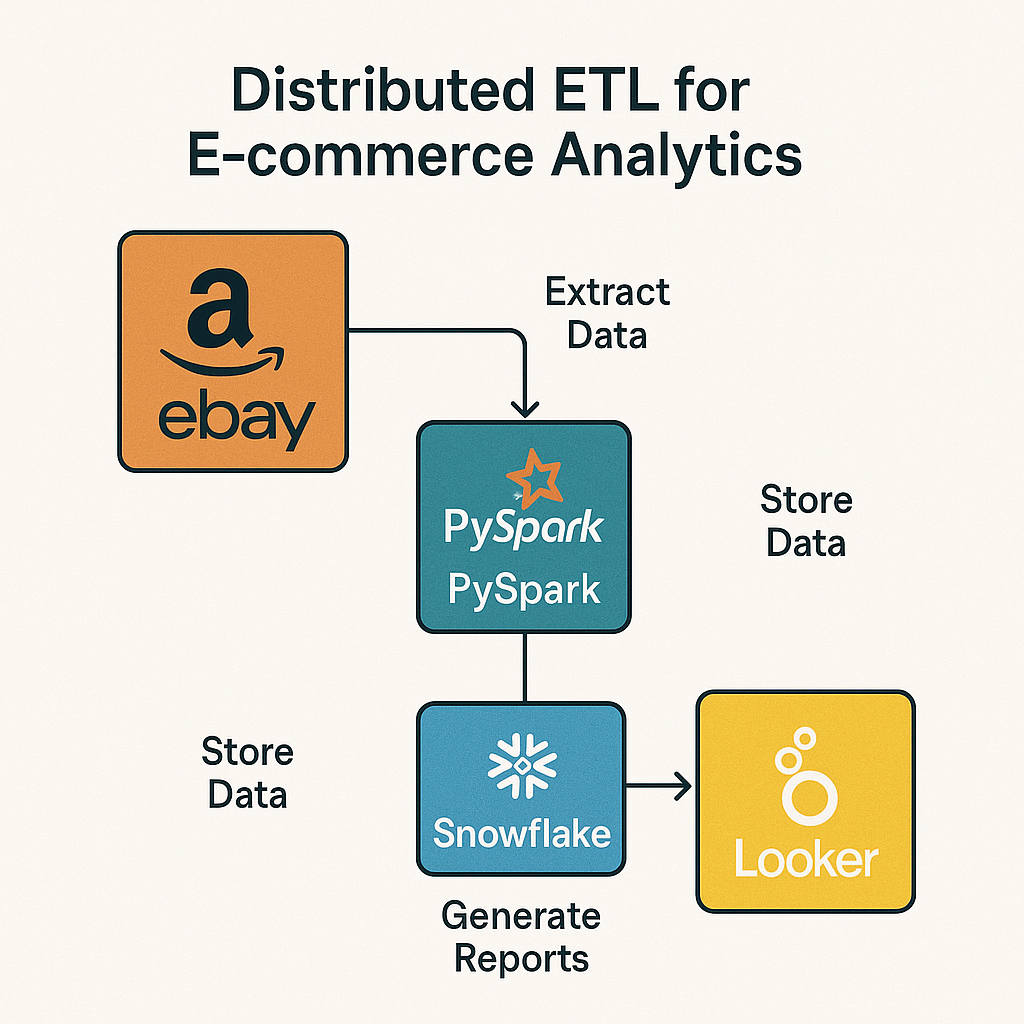

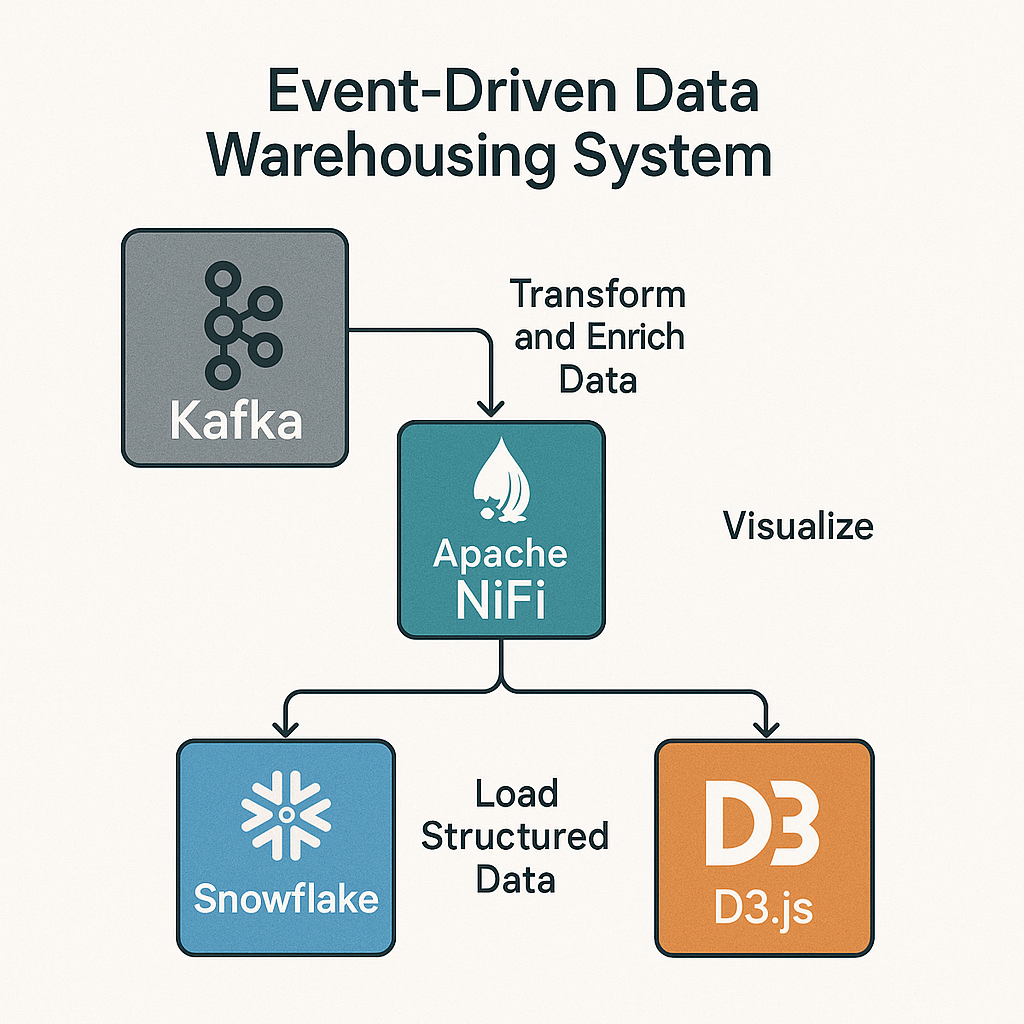

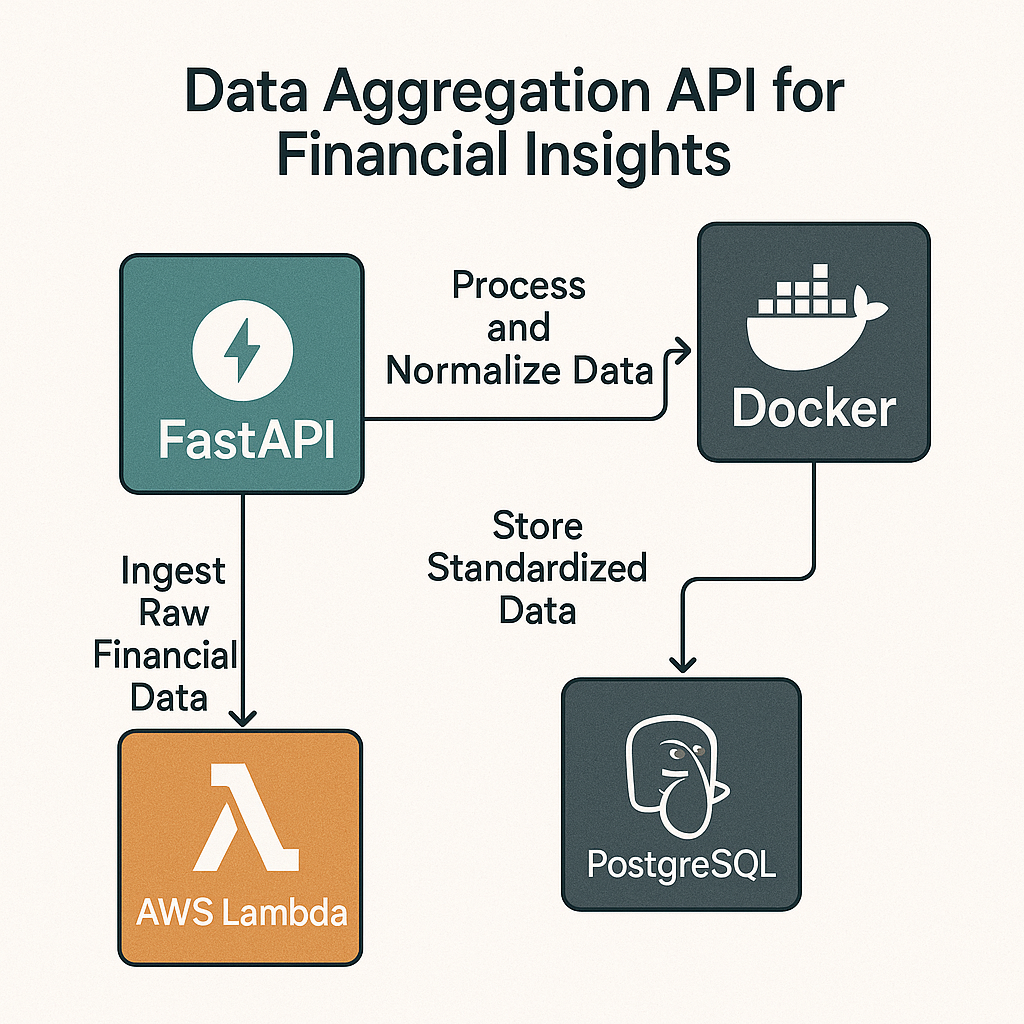

Proficient in implementing ETL workflows, real-time data streaming, and automated data integration across multi-cloud environments. Experienced in leveraging AWS Glue, Snowflake, and Airflow to optimize data pipelines and ensure seamless data flow.

Passionate about improving data accessibility and operational efficiency through automation and robust architecture. Proven track record of reducing processing times, enhancing data quality, and enabling data-driven insights.

What I'm doing

-

Programming and Scripting

Writing efficient code for data manipulation, pipeline automation, and ETL tasks.

-

Data Pipelines and ETL Tools

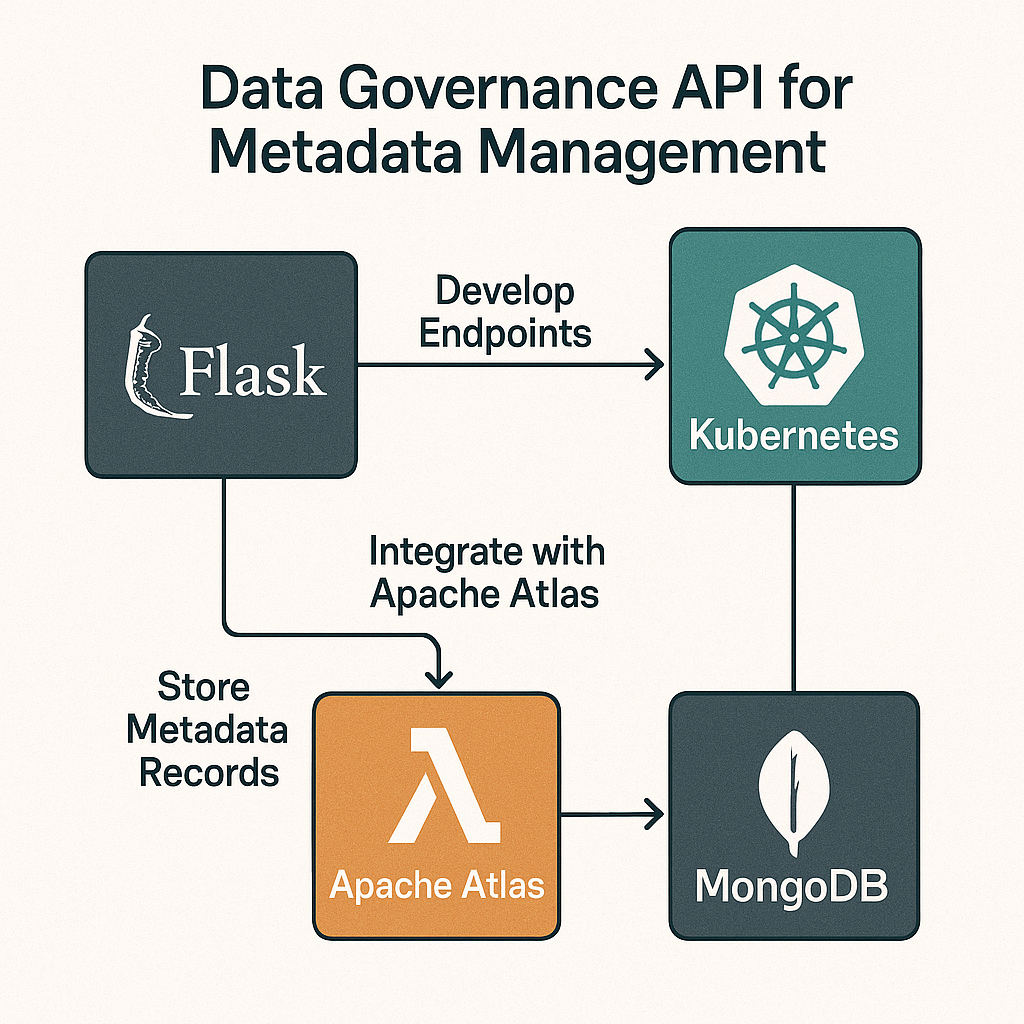

Building and orchestrating data workflows to extract, transform, and load data.

-

Data Warehousing and Storage

Managing structured and unstructured data in scalable, high-performance storage solutions.

-

Cloud Platforms and Tools

Leveraging cloud infrastructure to build, deploy, and maintain data processing applications.

Recommendations